The Evolution of Parallel Pipeline Systems with Operation Code Classification

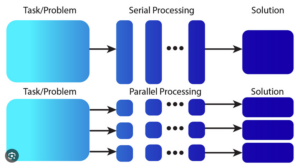

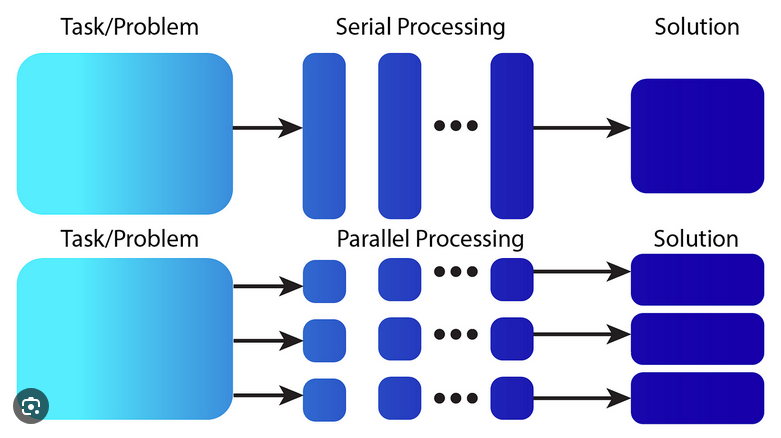

In high-performance computing, the parallel pipeline system has become a foundational architecture. It raises throughput by keeping several instructions in flight at once, in contrast to a strictly sequential processor that finishes one instruction before starting the next.

These systems also classify operation codes (opcodes) so that each instruction can be routed to a suitable execution unit. This helps balance the workload and sustain throughput under mixed instruction streams. This article explores how opcode classification shapes processor architecture and why it matters today.

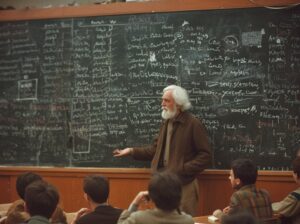

Understanding the Concept of Pipeline Architecture

Pipeline architecture works much like a production line: each instruction passes through a series of stages, typically Fetch, Decode, Execute, Memory Access, and Write Back. In any given cycle, each stage works on a different instruction.

This overlapping execution reduces idle time in the hardware, because a stage can start on the next instruction as soon as it hands off the current one. In the ideal case the pipeline completes one instruction per cycle once it is full, which is a significant boost in instruction throughput for both general-purpose and domain-specific CPUs.
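The overlap described above can be sketched with a small timing model. This is a minimal illustration that assumes an ideal pipeline with no stalls and one cycle per stage; the function names are hypothetical and chosen only for this example.

```python
STAGES = ["Fetch", "Decode", "Execute", "Memory Access", "Write Back"]


def pipeline_schedule(num_instructions, stages=STAGES):
    """Map each cycle to {stage: instruction index} for an ideal pipeline."""
    schedule = {}
    for instr in range(num_instructions):
        for depth, stage in enumerate(stages):
            # Instruction i occupies stage s in cycle i + s + 1 (cycles start at 1).
            schedule.setdefault(instr + depth + 1, {})[stage] = instr
    return schedule


def pipelined_cycles(num_instructions, num_stages=len(STAGES)):
    """Cycles to finish all instructions when the stages overlap."""
    if num_instructions == 0:
        return 0
    return num_stages + num_instructions - 1


def sequential_cycles(num_instructions, num_stages=len(STAGES)):
    """Cycles if each instruction finishes before the next one starts."""
    return num_stages * num_instructions


if __name__ == "__main__":
    for cycle, busy in sorted(pipeline_schedule(4).items()):
        print(cycle, busy)
    print(pipelined_cycles(4), "cycles pipelined vs",
          sequential_cycles(4), "cycles sequential")
```

Under these assumptions, four instructions take 8 cycles in the pipeline instead of 20 when run back to back, and the gap widens as the instruction count grows.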

The Need for Parallelism

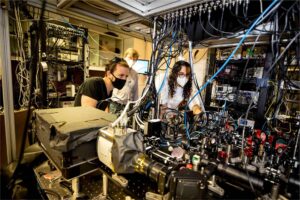

The rise of AI, real-time analytics, and scientific modeling has pushed traditional CPUs to their limits. To meet this challenge, modern architecture embraces parallelism.

By letting multiple execution units work at the same time, parallel pipelines reduce bottlenecks and increase efficiency. For performance-critical workloads, this kind of parallelism is a necessity rather than an option.

Stages of Evolution in Pipeline Processing

Early pipelines issued at most one instruction per cycle, strictly in program order. As chip design improved, designers exploited instruction-level parallelism (ILP) more aggressively, enabling CPUs to start more than one instruction per cycle.

This led to superscalar and VLIW architectures, which feed multiple execution units in parallel. Today’s CPUs go further, using out-of-order execution and speculative execution guided by branch prediction to begin work on a likely instruction path before the branch outcome is known, which hides delays when the prediction is correct.

The evolution of pipeline architecture reflects decades of innovation, each stage building on the last to push the boundaries of performance.

What Are Operation Codes (Opcodes)?

An opcode is the part of a machine instruction that tells the CPU which kind of operation to perform, such as arithmetic, data movement, or control flow.

In pipeline systems, categorizing opcodes is essential. Efficient classification helps distribute instructions across execution units, reducing pipeline stalls and ensuring smooth operation.

Classification of Operation Codes

Operation codes fall into distinct categories:

Arithmetic: Operations such as ADD, SUB, MUL, and DIV, handled by the ALU.

Logical: Bitwise instructions such as AND, OR, and XOR.

Data Transfer: Instructions such as LOAD, STORE, and MOVE.

Control Transfer: Instructions such as JUMP, CALL, and RETURN, which manage program flow.

Input/Output: Instructions that handle interaction with peripherals.

Classifying these codes enables better scheduling and resource management, which is vital for high-speed execution.
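The categories above can be turned into a small lookup-and-dispatch sketch. Everything beyond the category names and the example mnemonics from the list is an assumption made for illustration: the `IN`/`OUT` mnemonics, the unit names (`ALU`, `LSU`, `BRU`, `IOU`), and the choice to send logical operations to the ALU are hypothetical, not a description of any particular processor.

```python
from collections import defaultdict
from enum import Enum


class OpClass(Enum):
    ARITHMETIC = "arithmetic"
    LOGICAL = "logical"
    DATA_TRANSFER = "data_transfer"
    CONTROL_TRANSFER = "control_transfer"
    IO = "io"


OPCODE_CLASS = {
    "ADD": OpClass.ARITHMETIC, "SUB": OpClass.ARITHMETIC,
    "MUL": OpClass.ARITHMETIC, "DIV": OpClass.ARITHMETIC,
    "AND": OpClass.LOGICAL, "OR": OpClass.LOGICAL, "XOR": OpClass.LOGICAL,
    "LOAD": OpClass.DATA_TRANSFER, "STORE": OpClass.DATA_TRANSFER,
    "MOVE": OpClass.DATA_TRANSFER,
    "JUMP": OpClass.CONTROL_TRANSFER, "CALL": OpClass.CONTROL_TRANSFER,
    "RETURN": OpClass.CONTROL_TRANSFER,
    "IN": OpClass.IO, "OUT": OpClass.IO,  # hypothetical I/O mnemonics
}

# Hypothetical mapping from opcode class to execution unit.
UNIT_FOR_CLASS = {
    OpClass.ARITHMETIC: "ALU",
    OpClass.LOGICAL: "ALU",
    OpClass.DATA_TRANSFER: "LSU",      # load/store unit
    OpClass.CONTROL_TRANSFER: "BRU",   # branch unit
    OpClass.IO: "IOU",                 # I/O unit
}


def classify(opcode):
    """Return the OpClass for a mnemonic, ignoring case."""
    try:
        return OPCODE_CLASS[opcode.upper()]
    except KeyError:
        raise ValueError(f"unknown opcode: {opcode!r}") from None


def dispatch(program):
    """Group instructions by execution unit, keeping program order per unit."""
    queues = defaultdict(list)
    for instr in program:
        mnemonic = instr.split()[0]
        queues[UNIT_FOR_CLASS[classify(mnemonic)]].append(instr)
    return dict(queues)


if __name__ == "__main__":
    program = ["LOAD r1, 0(r2)", "ADD r3, r1, r4", "XOR r5, r3, r3", "JUMP loop"]
    for unit, instrs in dispatch(program).items():
        print(unit, instrs)
```

A table lookup keeps classification to a single step per instruction, which mirrors why a decoder can make the routing decision early in the pipeline. A real scheduler would also have to track dependencies between the queues, which this sketch leaves out.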

Parallelism Techniques in Modern Pipeline Systems

To maximize throughput, modern processors implement:

Instruction-level parallelism (ILP) – executing independent instructions from the same instruction stream at the same time.

Data-level parallelism (DLP) – applying the same operation to many data elements at once, as in SIMD instructions.

Thread-level parallelism (TLP) – distributing workloads across multiple threads and cores.

These layers of parallelism often coexist, unlocking massive computational power in today’s CPUs.
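To make the ILP case concrete, the sketch below packs a short instruction sequence into issue groups that could start in the same cycle. It is a simplified, greedy in-order model written for this article, not the algorithm of any real CPU: instructions are hypothetical `(dest, src1, src2)` register tuples, and a group is closed when it is full or when the next instruction reads or writes a register already written in the group.

```python
def issue_groups(program, width=2):
    """Greedily pack instructions, in order, into groups of up to `width`.

    Each instruction is a tuple (dest, src1, src2, ...). An instruction may
    not join a group that already writes one of its sources (read-after-write)
    or its destination (write-after-write).
    """
    groups = []
    current, written = [], set()
    for dest, *sources in program:
        conflict = dest in written or any(s in written for s in sources)
        if current and (len(current) == width or conflict):
            groups.append(current)
            current, written = [], set()
        current.append((dest, *sources))
        written.add(dest)
    if current:
        groups.append(current)
    return groups


if __name__ == "__main__":
    program = [
        ("r1", "r2", "r3"),  # r1 = r2 op r3
        ("r4", "r5", "r6"),  # independent of the first instruction
        ("r7", "r1", "r4"),  # needs r1 and r4
        ("r8", "r7", "r2"),  # needs r7
        ("r9", "r2", "r3"),  # independent of r8
    ]
    for cycle, group in enumerate(issue_groups(program, width=2), start=1):
        print(cycle, group)
```

With an issue width of two, the five instructions fit into three groups; with a width of one they need five. Out-of-order hardware can often do better than this in-order model by looking past a blocked instruction.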

Hazards in Pipeline Systems

Parallel pipelines face several hazards:

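Data hazards occur when an instruction needs a result that an earlier instruction, still in the pipeline, has not yet produced.

Structural hazards arise when multiple instructions need the same hardware resource in the same cycle.

Control hazards occur when the pipeline must keep fetching past a branch before the branch outcome is known.

Forwarding and stalling handle data hazards, additional or pipelined hardware resources relieve structural hazards, and branch prediction mitigates control hazards.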
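The effect of forwarding on data hazards can be estimated with a small stall counter. This is a sketch under textbook assumptions for a classic five-stage, single-issue, in-order pipeline: without forwarding, a consumer can decode no earlier than three cycles after its producer decodes (the register file is assumed to be written and read in the same cycle); with forwarding, an ALU result is usable one cycle later and a loaded value two cycles later. The instruction format `(opcode, dest, sources)` is hypothetical.

```python
def count_stalls(program, forwarding):
    """Count stall cycles caused by read-after-write dependencies.

    program: list of (opcode, dest, sources); dest may be None (e.g. STORE).
    """
    ready = {}    # register -> earliest decode cycle at which it can be read
    prev_decode = 0
    stalls = 0
    for opcode, dest, sources in program:
        earliest = prev_decode + 1
        decode = max([earliest] + [ready.get(src, 0) for src in sources])
        stalls += decode - earliest
        if dest is not None:
            if not forwarding:
                gap = 3   # wait for the producer's Write Back stage
            elif opcode == "LOAD":
                gap = 2   # value available only after Memory Access
            else:
                gap = 1   # result forwarded straight from Execute
            ready[dest] = decode + gap
        prev_decode = decode
    return stalls


if __name__ == "__main__":
    program = [
        ("LOAD", "r1", ["r2"]),
        ("ADD", "r3", ["r1", "r4"]),
        ("SUB", "r5", ["r3", "r1"]),
        ("STORE", None, ["r5", "r2"]),
    ]
    print("without forwarding:", count_stalls(program, forwarding=False))
    print("with forwarding:   ", count_stalls(program, forwarding=True))
```

For this dependent chain the model reports six stall cycles without forwarding and one with it; the remaining stall is the load-use delay, which forwarding alone cannot remove.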

Case Study: Parallel Pipelines in Intel and AMD CPUs

Intel’s recent Core CPUs (such as the i9) are commonly described as using pipelines of up to about 20 stages, combined with micro-op fusion, dynamic scheduling, and aggressive branch prediction. AMD’s Ryzen and EPYC lines use a chiplet architecture, return stack buffers, and simultaneous multithreading.