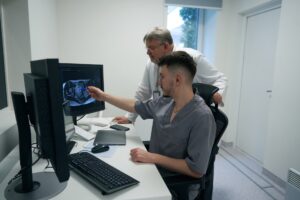

Ethical and Legal Accountability of AI Decisions in Clinical Care

The integration of AI into medicine automates parts of clinical decision making that were previously inconceivable to automate and opens new possibilities for diagnosis and patient care. Since its introduction into healthcare, AI has raised complex ethical and legal questions that must be addressed to ensure patient safety, equity, and responsible care. This paper identifies the ethical and legal issues surrounding AI decision making in healthcare and underscores the need for more robust accountability structures. It outlines the primary ethical concerns, including algorithmic bias, the need for transparency, patient agency, and the legal requirements of consent, and considers the relationship between the healthcare system and the patient. It further examines the accountability issues that arise when AI decisions adversely affect individuals, and the existing models for allocating responsibility among developers, healthcare institutions, and even patients. Drawing on case studies of AI use in healthcare, it examines the GDPR and HIPAA from a legal perspective, along with more recent legislation such as the EU’s AI Act. The paper offers recommendations that include ethical frameworks and guidelines for AI, a focus on AI explainability, and more advanced regulation of responsible AI, including specialty-specific regulations. Future research should focus on better explainability, stronger policy, and more firmly guaranteed accountability for AI solutions in support of patient safety and equity. Addressing these concerns would better protect patient rights and welfare while maximizing the gains from AI deployment across the rest of the healthcare system.