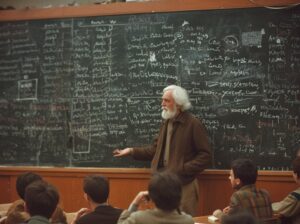

Slow Thinking & Deep Learning: Tversky & Kahneman’s Cabs

This note describes my experience in encouraging the ‘deep learning’ that has long been advocated in the pedagogic literature. My students have typically studied statistics only as part of another discipline, such as economics, business or law. So while they have mostly been aged 17 or over, my approach has necessarily presumed only elementary mathematics and should thus be adaptable to younger people. My main example is a problem story that Amos Tversky constructed in the 1970s to evaluate human beings’ intuitions about statistical inference, and that his colleague, the Nobel prizewinner Daniel Kahneman, revisited in a best-seller in 2012. In his book, Kahneman describes this problem as ‘standard’ and unequivocally answers it with a simple fixed-point number. I describe how I have encouraged my students to challenge the certainty of this assertion by identifying ambiguities left unexplained in the story; in the process I strive to stimulate individuals’ Thinking, Fast and Slow, to use the title of Kahneman’s book, arguing that his ‘slow thinking’ is a prerequisite of deep learning. While Kahneman more fully describes the problem as one of ‘Bayesian inference’, his story can be deconstructed without reference to the work of Thomas Bayes. However, the bitterest conflicts in the statistical academic community continue to arise from the Bayes-frequentist controversy; we cannot expect our students to resolve this, but we owe it to them to explain its causes. So my article includes as an appendix a ‘Bayes Icebreaker’, in which I show an analogy between the cab story and an exercise previously described in Teaching Statistics.